Stereoscopic vision and sophisticated data processing in the brain enable humans to sense their environment in three dimensions. A growing number of emerging technologies demand this 3D-Sensing capability from machines and systems as well, often with stringent safety and reliability requirements for the method used.

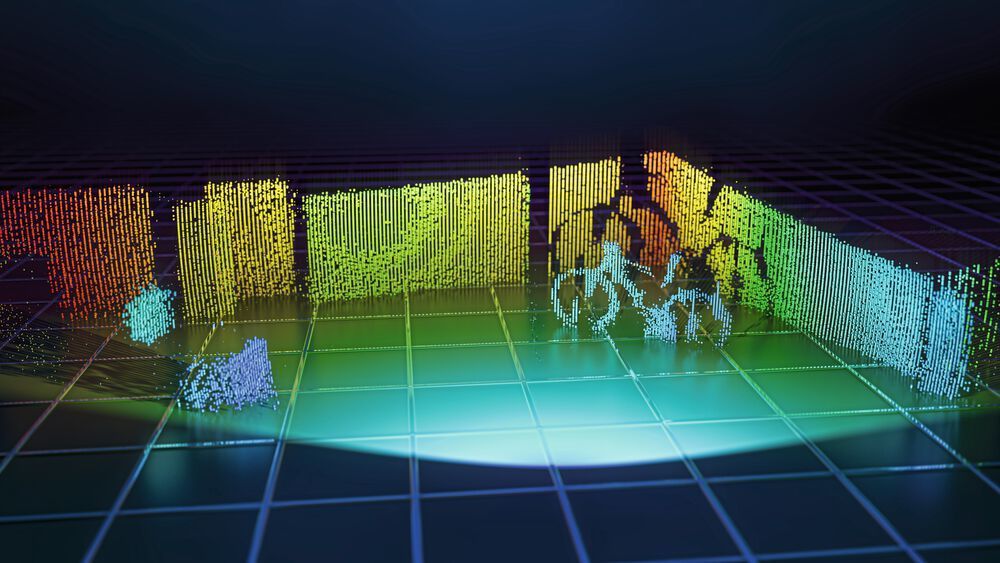

A popular and proven approach to 3D-Sensing, the three-dimensional detection of the environment, is the time-of-flight (ToF) method, which determines distance by measuring the time of flight of light. A laser pulse is emitted, reflected by an object and then detected on an image sensor. The distance to the object follows from the measured time of flight and the speed of light. Under the name LiDAR (Light Detection and Ranging), this method is already widely used, for example in driver assistance systems, smartphones and robotics.
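The relationship between time of flight and distance can be sketched in a few lines of Python. This is a minimal illustration, not a description of any particular sensor: the function names are hypothetical, the speed of light in vacuum is used as an approximation for air, and the measured time is assumed to be the full round trip from emitter to object and back, which is why the result is halved.

```python
# Speed of light in vacuum (m/s); assumed to be close enough for air in this sketch.
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def distance_from_round_trip(round_trip_time_s: float) -> float:
    """Return the one-way distance in meters for a measured round-trip time."""
    if round_trip_time_s < 0:
        raise ValueError("round-trip time must be non-negative")
    # The pulse travels to the object and back, hence the factor 1/2.
    return SPEED_OF_LIGHT_M_PER_S * round_trip_time_s / 2.0


def round_trip_from_distance(distance_m: float) -> float:
    """Return the round-trip time in seconds for an object at the given distance."""
    if distance_m < 0:
        raise ValueError("distance must be non-negative")
    return 2.0 * distance_m / SPEED_OF_LIGHT_M_PER_S


if __name__ == "__main__":
    # A 100 ns round trip corresponds to an object roughly 15 m away.
    print(f"{distance_from_round_trip(100e-9):.2f} m")
```

The numbers show why timing matters so much: an object about 15 m away returns the pulse after only about 100 ns.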

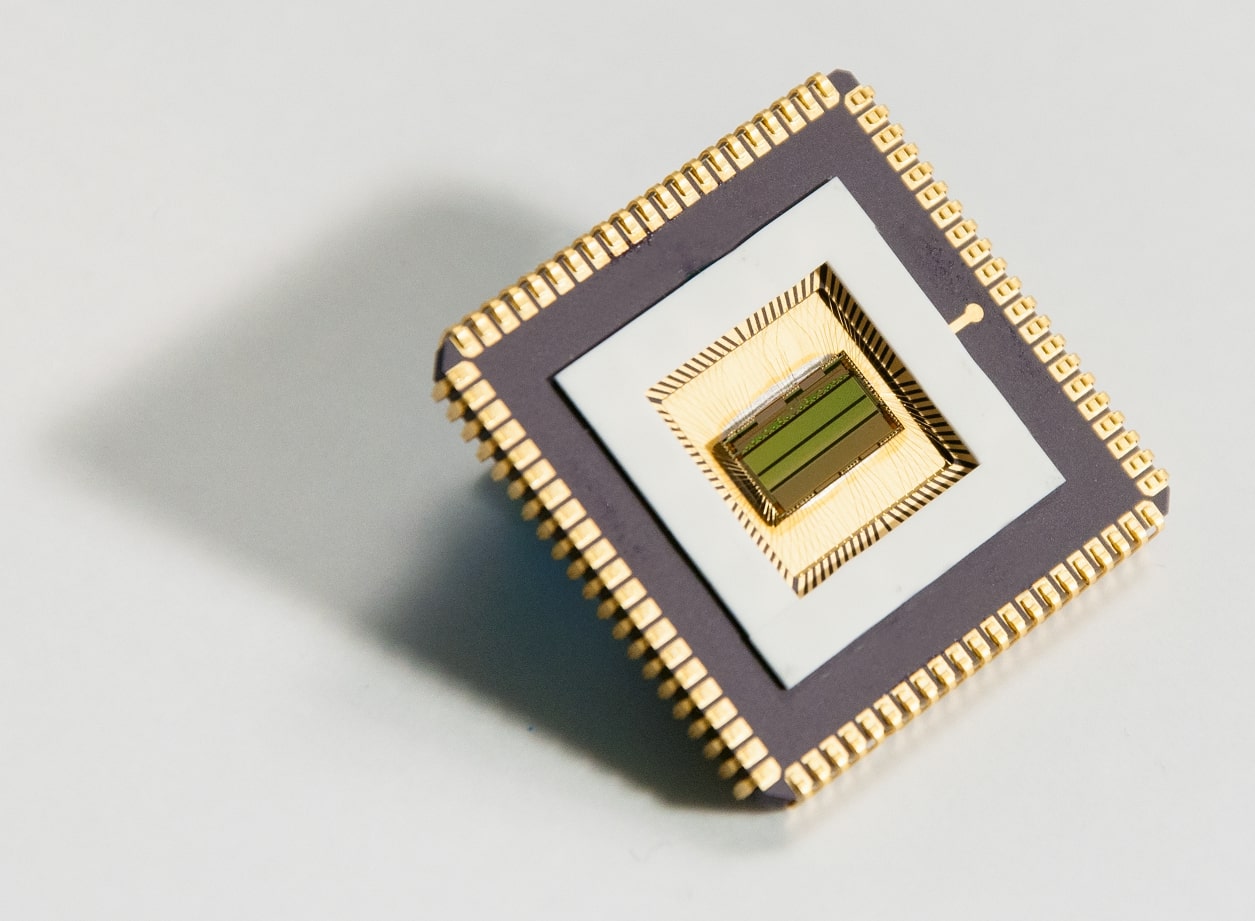

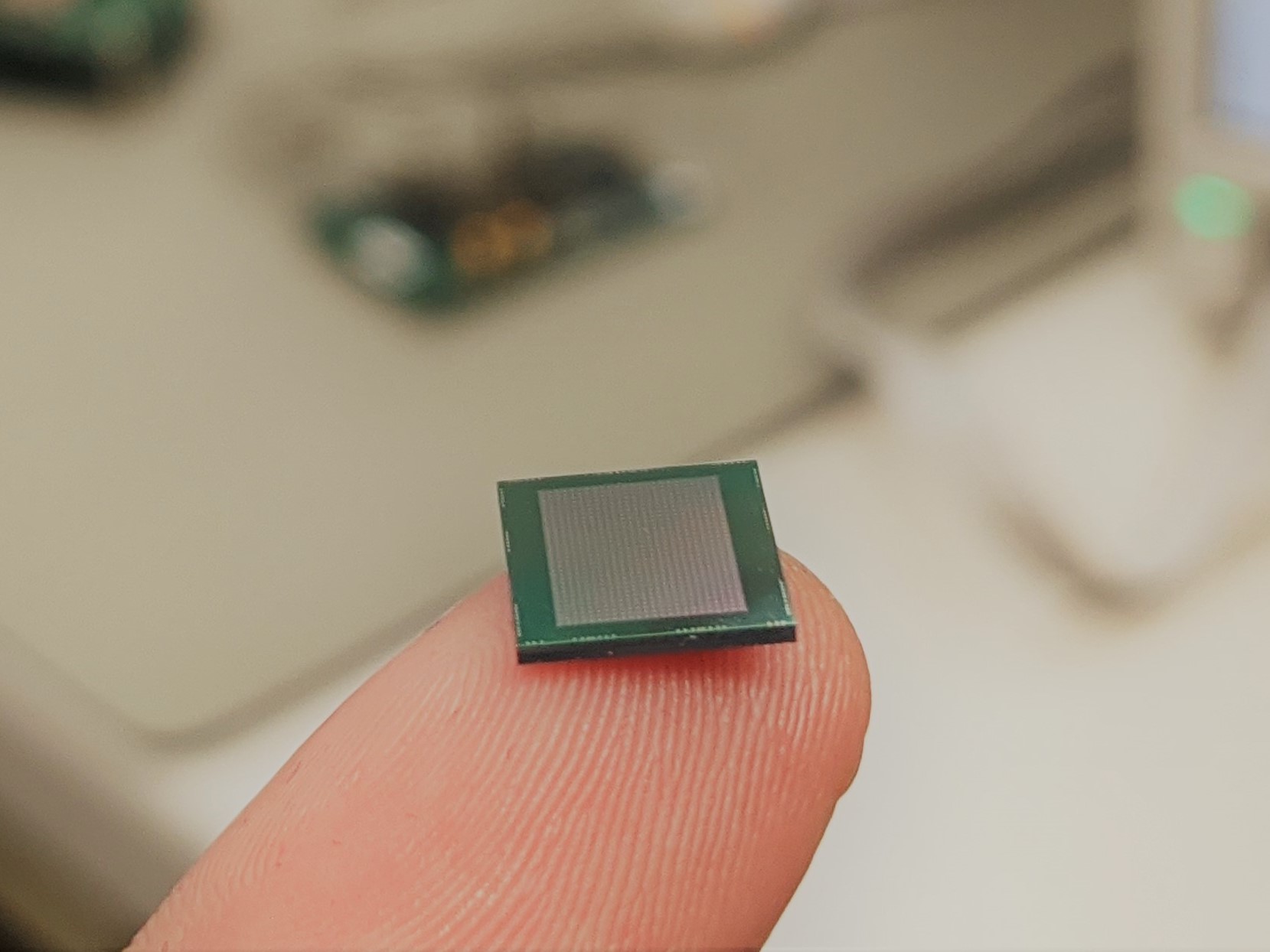

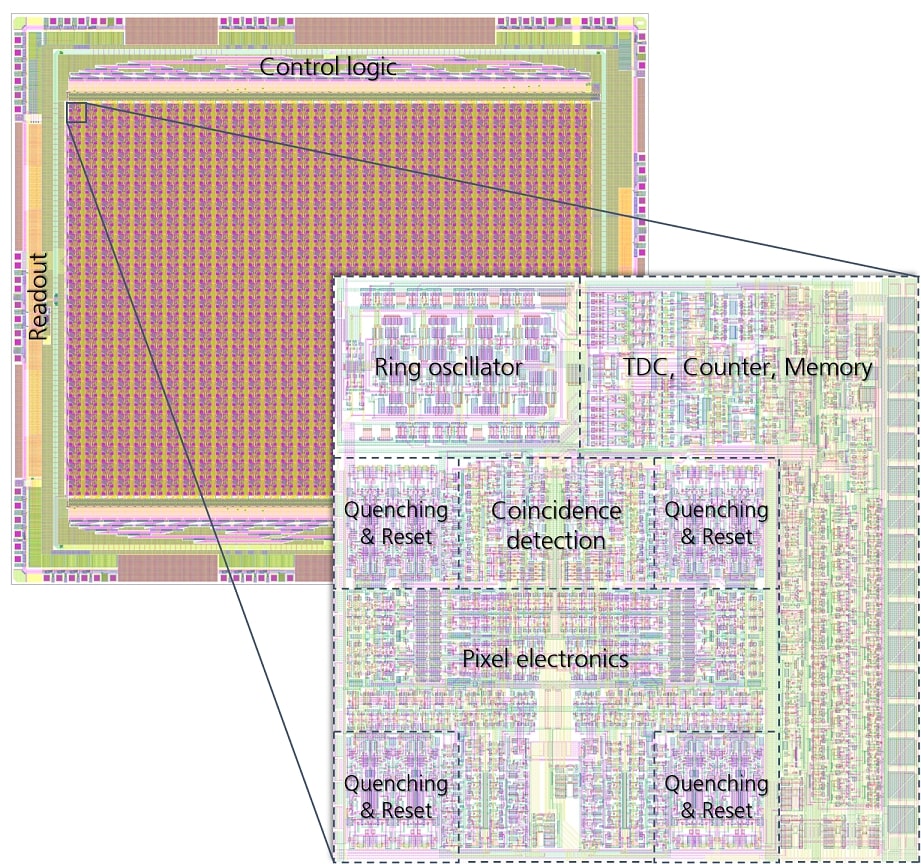

Advances in laser technology and the high speed of light allow, in principle, very fast distance determination and thus 3D-Sensing in real time. However, this places very high demands on the image sensor, which must reliably detect photons and determine their time of arrival with high resolution. In addition, if the distances of different points in space are to be determined, the image sensor needs multiple pixels, each with its own independent timing element.
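To make the timing requirement concrete, the sketch below converts a timing resolution into the corresponding distance resolution and turns a small grid of per-pixel arrival times into a depth map. It is an illustrative assumption rather than a model of a specific sensor: the function names are hypothetical, each pixel is assumed to report its own round-trip time in seconds, and `None` stands for a pixel that detected no returning photon.

```python
from typing import List, Optional

# Speed of light in vacuum (m/s); assumed to be close enough for air in this sketch.
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def range_resolution_m(timing_resolution_s: float) -> float:
    """Distance step that corresponds to one step of round-trip timing resolution."""
    return SPEED_OF_LIGHT_M_PER_S * timing_resolution_s / 2.0


def depth_map_m(
    arrival_times_s: List[List[Optional[float]]],
) -> List[List[Optional[float]]]:
    """Convert per-pixel round-trip times into per-pixel distances in meters.

    Pixels without a detection (None) stay None in the output.
    """
    return [
        [None if t is None else SPEED_OF_LIGHT_M_PER_S * t / 2.0 for t in row]
        for row in arrival_times_s
    ]


if __name__ == "__main__":
    # 1 ns of timing resolution corresponds to roughly 15 cm in distance.
    print(f"{range_resolution_m(1e-9) * 100:.1f} cm")

    # Hypothetical 2x2 sensor: one pixel saw no return.
    times = [[20e-9, None], [40e-9, 66.7e-9]]
    for row in depth_map_m(times):
        print(row)
```

Under these assumptions, resolving distances at the centimeter scale requires timing on the order of 100 ps or better in every pixel, which illustrates why the independent timing elements are demanding to build.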

When LiDAR is used in autonomous vehicles in particular, further challenges arise for the image sensor. One is background light from the sun, which can vary greatly and must still be distinguished from the laser light. Since the transmitter (laser) side is largely constrained by eye-safety requirements, the main focus is on optimizing the receiver (image sensor) and the subsequent data processing.