Resource-saving programming

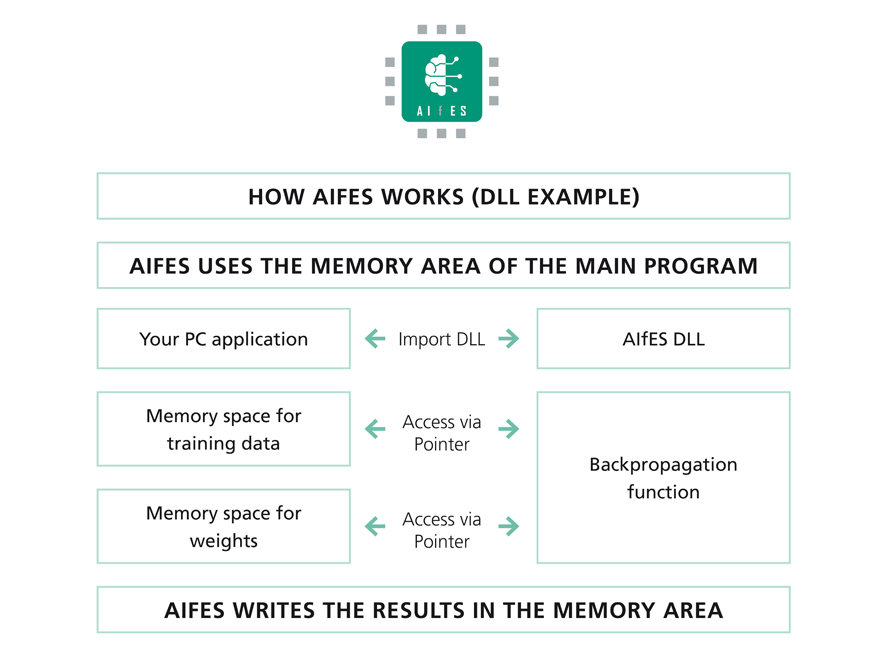

AIfES functions work explicitly with pointer arithmetic and declare only the variables that are strictly necessary inside a function. This means that the memory areas for the training data and the weights are provided by the main program. AIfES functions access these memory areas through pointers passed to them, without requiring large resources themselves.
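A minimal sketch of this pattern in C, assuming a single fully connected layer. The function `dense_forward` is hypothetical and not part of the AIfES API. It allocates nothing itself and works only on buffers that the calling program owns and passes in as pointers.

```c
#include <stddef.h>

/* Hypothetical dense-layer forward pass: output = weights * input + bias.
 * The function allocates no memory. Input, weights, bias and output all
 * live in buffers owned by the caller and are accessed through pointers. */
void dense_forward(const float *input, size_t n_in,
                   const float *weights, /* n_out rows of n_in values, row-major */
                   const float *bias,    /* n_out values */
                   float *output, size_t n_out)
{
    for (size_t o = 0; o < n_out; ++o) {
        /* Pointer arithmetic selects the weight row of neuron o */
        const float *w_row = weights + o * n_in;
        float acc = bias[o];
        for (size_t i = 0; i < n_in; ++i) {
            acc += w_row[i] * input[i];
        }
        output[o] = acc;
    }
}
```

The main program can declare all buffers as static arrays, for example `static float weights[6];` for a layer with 2 inputs and 3 neurons, so the memory footprint is fixed at compile time, which is usually what one wants on a microcontroller without a heap.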

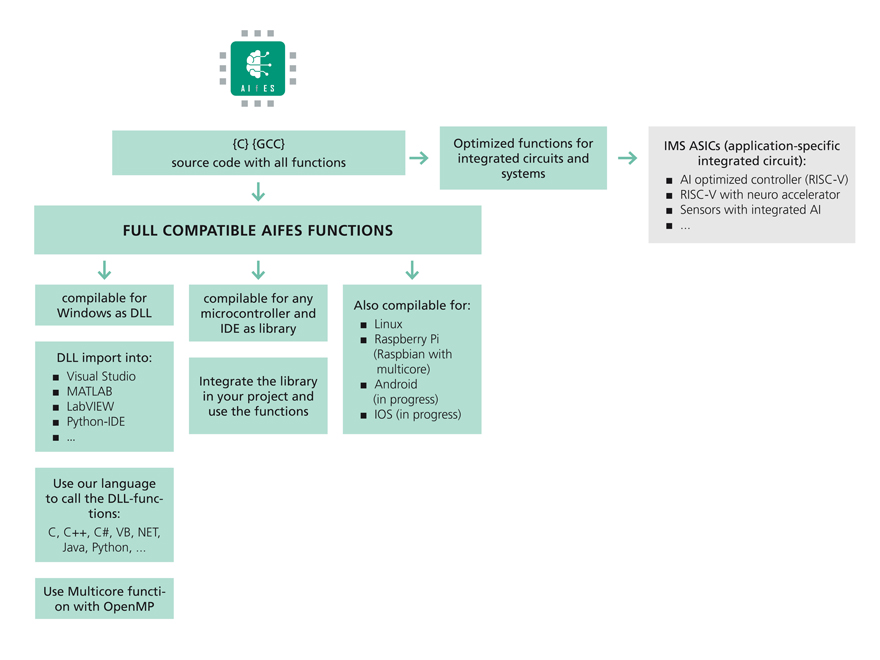

Platform-independent and compatible

Because the code is written to be compatible with GCC, it can be ported to almost any platform. This makes it possible to run AIfES completely self-sufficiently on an embedded system, including the learning algorithm.
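To illustrate what running the learning algorithm on the target itself can look like, here is a hedged sketch of one gradient-descent step for a single linear neuron with squared-error loss. It uses only standard C99 and no dynamic memory, so the same file should compile with GCC for a PC as well as for a microcontroller. The function name `sgd_step_linear` is hypothetical; AIfES' own training routines are not shown here.

```c
#include <stddef.h>

/* Hypothetical single gradient-descent step for one linear neuron
 * y = w . x + b with loss L = 0.5 * (y - target)^2.
 * Weights and bias are updated in place in caller-owned memory.
 * Returns the loss computed before the update. */
float sgd_step_linear(float *w, float *b, const float *x, size_t n,
                      float target, float learning_rate)
{
    /* Forward pass: y = w . x + b */
    float y = *b;
    for (size_t i = 0; i < n; ++i) {
        y += w[i] * x[i];
    }

    /* Gradients: dL/dw_i = (y - target) * x_i, dL/db = (y - target) */
    float err = y - target;
    for (size_t i = 0; i < n; ++i) {
        w[i] -= learning_rate * err * x[i];
    }
    *b -= learning_rate * err;

    return 0.5f * err * err;
}
```

Calling this function repeatedly over the training samples stored on the device trains the neuron without any connection to a PC.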

For use under Windows, for example, the source code can be compiled as a »Dynamic Link Library« (DLL) so that it can be integrated into software tools such as LabVIEW or MATLAB. The direct connection to MATLAB is particularly helpful, for example to test different data preprocessing methods.

Integration into development environments such as Visual Studio or a Python IDE is also possible. The main program that loads the DLL can therefore be written in a different programming language, such as C++, C#, Python, VB.NET or Java.
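A minimal sketch of how a C function could be exported from such a DLL, assuming GCC (for example MinGW on Windows). The macro `AIFES_DEMO_API` and the function `demo_dot` are hypothetical illustrations, not part of AIfES. Keeping the exported signature to plain C types (pointers, `int`, `float`) is what makes the function straightforward to call from the other languages and tools mentioned above.

```c
#include <stddef.h>

/* Hypothetical export macro: on Windows the symbol is exported from the
 * DLL; on other systems (shared library built with GCC) it expands to nothing. */
#if defined(_WIN32)
#  define AIFES_DEMO_API __declspec(dllexport)
#else
#  define AIFES_DEMO_API
#endif

/* Hypothetical exported function: computes the dot product of two
 * caller-owned float arrays of length n and writes it to *result.
 * Returns 0 on success and -1 if a pointer is NULL or n is negative. */
AIFES_DEMO_API int demo_dot(const float *a, const float *b, int n, float *result)
{
    if (a == NULL || b == NULL || result == NULL || n < 0) {
        return -1;
    }
    float acc = 0.0f;
    for (int i = 0; i < n; ++i) {
        acc += a[i] * b[i];
    }
    *result = acc;
    return 0;
}
```

With MinGW, a command such as `gcc -shared -o aifes_demo.dll aifes_demo.c` produces the DLL; on Linux, `gcc -shared -fPIC -o libaifes_demo.so aifes_demo.c` builds the equivalent shared library (the file names are placeholders).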

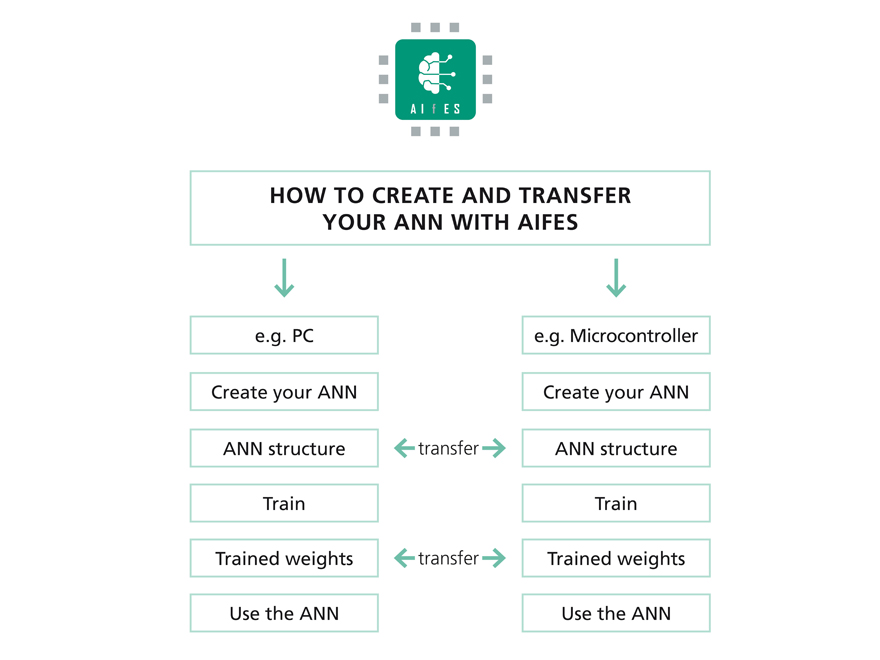

For the initial development of an individual FNN (feedforward neural network), a PC is a suitable platform because it performs the calculations quickly. Once the right configuration has been found, the network can be ported to the embedded system.
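When choosing the configuration on the PC, it helps to check early whether the network will fit into the memory of the target. The sketch below assumes fully connected layers with one bias per neuron and `float` parameters; each transition from a layer with n_l neurons to one with n_(l+1) neurons contributes n_l * n_(l+1) weights plus n_(l+1) biases. The function names are hypothetical.

```c
#include <stddef.h>

/* Number of trainable parameters of a fully connected FNN.
 * layers[0] is the input size, layers[n_layers - 1] the output size. */
size_t fnn_param_count(const size_t *layers, size_t n_layers)
{
    size_t count = 0;
    /* "l + 1 < n_layers" avoids unsigned underflow when n_layers is 0 */
    for (size_t l = 0; l + 1 < n_layers; ++l) {
        count += layers[l] * layers[l + 1] /* weights */
               + layers[l + 1];            /* biases  */
    }
    return count;
}

/* Bytes needed to store all parameters as float values */
size_t fnn_param_bytes(const size_t *layers, size_t n_layers)
{
    return fnn_param_count(layers, n_layers) * sizeof(float);
}
```

For layer sizes {2, 3, 1} this gives 2·3 + 3 + 3·1 + 1 = 13 parameters, i.e. 52 bytes when `sizeof(float)` is 4. Training also needs memory for activations and gradients, so the weights alone are only a lower bound; this matters on a board such as the Arduino UNO, whose ATmega328P has 2 KB of SRAM.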

AIfES has already been tested on the following platforms and microcontrollers, among others:

- Windows (DLL)

- Raspberry Pi with Raspbian

- Arduino UNO

- Arduino Nano 33 BLE Sense

- Arduino Portenta H7

- ATMega32U4

- STM32 F4 Series (ARM Cortex-M4)