Demonstrator for personalizable gesture recognition that can be trained directly on the device.

Fraunhofer IMS is researching personalizable artificial intelligence (AI), which allows devices to be adapted or optimized to their user through training. Because the AI software framework AIfES can train artificial neural networks (ANNs) directly on small devices such as microcontrollers, the technical basis for this already exists.

The AIfES development team used its existing gesture recognition demonstrator as a basis to show the potential of personalizable AI. That demonstrator has already been presented at trade fairs, and a video showing its functions is available on the AIfES YouTube channel. It can recognize numbers written in the air and send a corresponding command wirelessly to a robotic arm. Its disadvantage is that the user has to perform the gestures exactly as they were pre-trained. For intuitive use, it is better to train individual gestures directly in the system, without a PC or any other device. This is exactly what the new demonstrator implements.

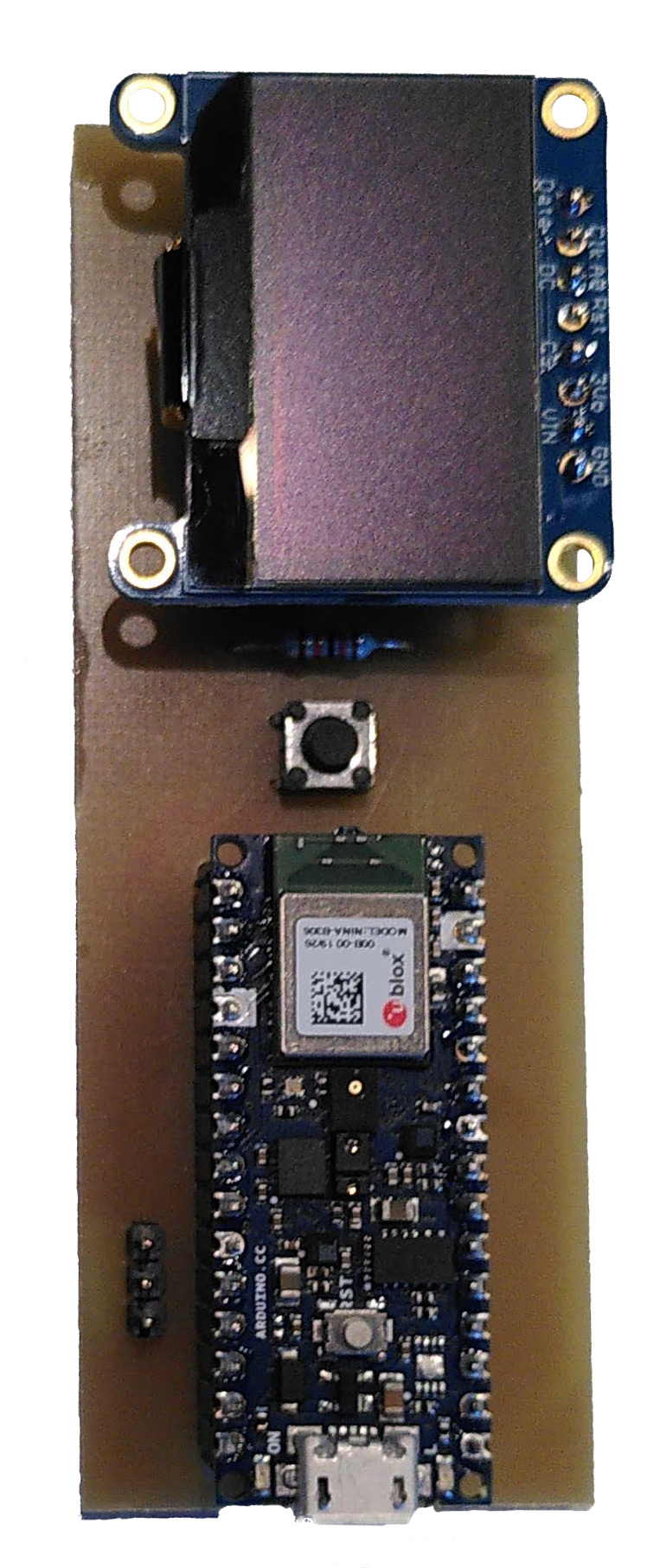

The implementation is based on the Arduino Nano 33 BLE Sense development board. For gesture recognition, we used only the acceleration data of the integrated 9-axis iNEMO inertial module (IMU). An OLED display gives the user feedback during training, and a simple button is used for operation. The button, the display and the other components are mounted on a custom-milled PCB to make the device easier to handle.

The user can put the demonstrator into learning mode at startup. In this mode, the user is guided through the training sequence and can record training data for up to ten gestures. The number of gestures that can be trained is limited by the memory of the microcontroller. Each gesture should be repeated about four times to ensure training success. After each executed gesture, the raw data is processed and only the necessary features are temporarily stored as training data. The feature extraction algorithm was developed by the AIfES team and reduces the amount of data considerably. These features later form the inputs of the very compact ANN.

After all desired gestures have been performed, AIfES computes the necessary network structure, creates the matching ANN at runtime and trains it from scratch. The network structure depends on the number of gestures, because each gesture forms a class and each class is represented by one output neuron of the ANN. After training, the learning success can be verified using the learning error, and the network structure and the optimized weights are stored. For example, training three gestures takes about 2 seconds on the Arduino Nano 33 BLE Sense using the Adam optimizer in AIfES.
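How the network structure follows from the number of recorded gestures can be illustrated with a short sketch. This is not AIfES code: the function names, the 12 input features and the hidden layer of 10 neurons are assumptions chosen purely for illustration. Only two points are taken from the description above: each gesture is one class with one output neuron, and at most ten gestures can be trained.

```python
MAX_GESTURES = 10  # upper limit stated in the text (memory-bound)


def layer_sizes(num_features, num_gestures, hidden_neurons=10):
    """Return [inputs, hidden, outputs] for a small dense network.

    hidden_neurons is a hypothetical value, not the demonstrator's setting.
    """
    if not 1 <= num_gestures <= MAX_GESTURES:
        raise ValueError("number of gestures must be between 1 and 10")
    # One output neuron per gesture class.
    return [num_features, hidden_neurons, num_gestures]


def parameter_count(sizes):
    """Count weights plus biases of a fully connected network."""
    return sum((n_in + 1) * n_out for n_in, n_out in zip(sizes, sizes[1:]))


def one_hot(class_index, num_gestures):
    """Target vector for training: 1.0 at the gesture's class, 0.0 elsewhere."""
    return [1.0 if i == class_index else 0.0 for i in range(num_gestures)]


if __name__ == "__main__":
    for gestures in (3, 10):
        sizes = layer_sizes(num_features=12, num_gestures=gestures)
        print(gestures, "gestures:", sizes, parameter_count(sizes), "parameters")
```

Under these assumed sizes, adding a gesture only widens the output layer, so the number of weights to store and train grows with each additional class. This fits the statement that the number of trainable gestures is limited by the microcontroller's memory.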

After training, the demonstrator switches to inference mode and recognizes gestures. Recognizing a gesture takes about 20 to 100 ms on this hardware, depending on how long the gesture is and how many measurements are taken. A demo video of this demonstrator will be available soon on the AIfES YouTube channel.
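The following sketch illustrates why recognition time can depend on the number of measurements even though the network itself has a fixed size. The actual AIfES feature extraction is not described here, so the segment averaging below is only a stand-in, and `segment_means`, `predict` and the example output scores are hypothetical.

```python
def segment_means(samples, num_segments=4):
    """Reduce a variable-length list of (x, y, z) acceleration samples
    to a fixed-length feature vector of num_segments * 3 values.

    Stand-in technique: per-axis mean over equally sized time segments.
    """
    n = len(samples)
    if n < num_segments:
        raise ValueError("recording is too short")
    features = []
    for s in range(num_segments):
        # Integer boundaries split the recording into contiguous chunks.
        chunk = samples[s * n // num_segments:(s + 1) * n // num_segments]
        for axis in range(3):
            features.append(sum(p[axis] for p in chunk) / len(chunk))
    return features


def predict(outputs):
    """Return the index of the output neuron with the highest score."""
    return max(range(len(outputs)), key=outputs.__getitem__)


if __name__ == "__main__":
    short_gesture = [(0.1, 0.0, 1.0)] * 20   # few measurements
    long_gesture = [(0.1, 0.0, 1.0)] * 100   # many measurements
    # Both recordings yield the same number of network inputs.
    print(len(segment_means(short_gesture)), len(segment_means(long_gesture)))
    # Hypothetical network outputs for three trained gestures.
    print(predict([0.1, 0.7, 0.2]))
```

In this sketch, the preprocessing touches every recorded sample, so its cost grows with the length of the gesture, while the classification step always sees the same number of inputs.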

Potential applications:

- Detection of complex gestures and movements

- Evaluation of motion sequences

- Free-hand machine control

- Intuitive control of wearables

- Automatic control of medical devices

- HMI for gaming applications

- Smart building applications