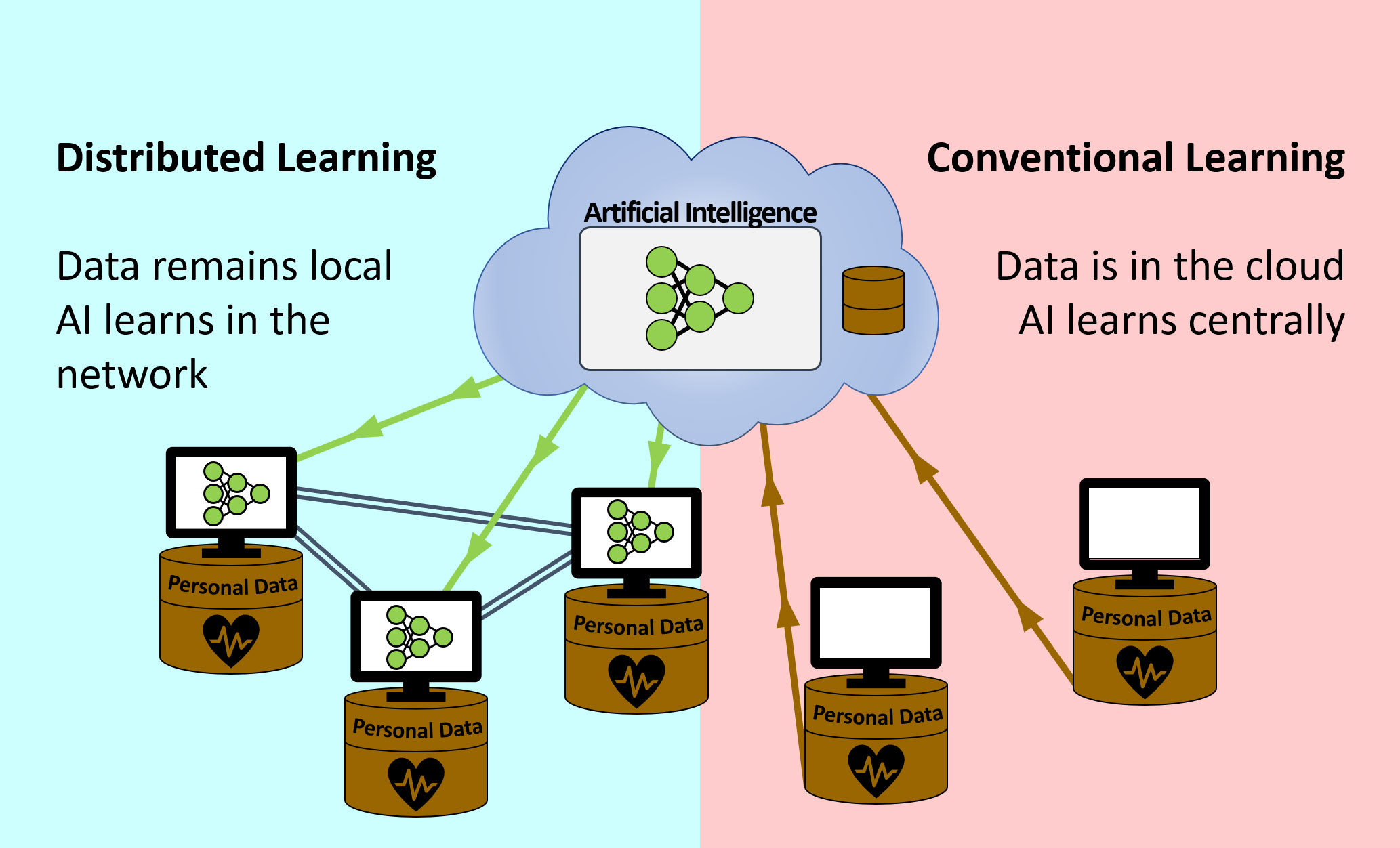

Modern Artificial Intelligence (AI) methods are a powerful tool for addressing complex problems in our society. However, ever-deeper neural networks also require ever-larger and more diverse data sets, and that alone presents a huge challenge. As a result, data-processing companies are collecting more and more data for AI training. While the current standard is to send all data to a central cloud, Fraunhofer IMS is working on a different solution: distributed learning that works even on resource-constrained embedded systems.

- Data remains in your own hands

In healthcare and other fields of application, data protection is a decisive criterion. Legal requirements, as well as companies' own information policies, often prohibit sharing data with other participants.

Distributed learning therefore brings the network to the user: sensitive data is used for training directly on the user's own devices. Afterwards, only the learning progress achieved, in the form of the updated model, is transmitted, so no participant has to give away their own data.
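The on-device training step can be sketched as follows. This is a minimal illustration, assuming a tiny logistic-regression model trained with plain stochastic gradient descent; the function name `local_update` and its parameters are hypothetical and do not represent the Fraunhofer IMS implementation. What matters is what leaves the device: the function returns only updated weights, never the training samples.

```python
import math
from typing import List, Tuple

# Hypothetical sample format: (feature vector, binary label 0 or 1)
Sample = Tuple[List[float], int]


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def local_update(global_weights: List[float], data: List[Sample],
                 lr: float = 0.1, epochs: int = 5) -> List[float]:
    """Train a copy of the shared model on local data and return only its weights.

    Assumes len(global_weights) == n_features + 1, with the bias stored last.
    """
    w = list(global_weights)  # work on a copy; the received model is not modified
    for _ in range(epochs):
        for x, y in data:
            z = sum(wi * xi for wi, xi in zip(w, x)) + w[-1]
            err = sigmoid(z) - y  # derivative of the log-loss with respect to z
            for i, xi in enumerate(x):
                w[i] -= lr * err * xi
            w[-1] -= lr * err
    return w  # only these parameters are transmitted; `data` stays on the device
```

For example, `local_update([0.0, 0.0], [([1.0], 1), ([-1.0], 0)])` returns a two-element weight list whose first entry is positive, i.e. the device has learned that positive inputs belong to class 1.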

- Profit from the knowledge of others

After training, the individual learning progress of all participants is merged into a common model, which is then distributed back to them. In this way, each participant benefits from the knowledge of the others without revealing their own data.
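A common way to perform this merge is federated averaging (FedAvg): the server averages the participants' model parameters, weighting each participant by the number of samples it trained on. The sketch below assumes that rule and a flat list of parameters per participant; the article does not state which aggregation method Fraunhofer IMS uses, and `federated_average` is a hypothetical name.

```python
from typing import List


def federated_average(client_weights: List[List[float]],
                      client_sizes: List[int]) -> List[float]:
    """Merge client models into one common model (FedAvg-style weighted mean)."""
    total = sum(client_sizes)
    if total == 0:
        raise ValueError("at least one client must report training samples")
    dim = len(client_weights[0])
    merged = [0.0] * dim
    for weights, n in zip(client_weights, client_sizes):
        if len(weights) != dim:
            raise ValueError("all clients must share the same model shape")
        # Each client contributes in proportion to its share of the data
        for i, wi in enumerate(weights):
            merged[i] += wi * n / total
    return merged
```

For two participants with weights `[1.0, 2.0]` (1 sample) and `[3.0, 4.0]` (3 samples), `federated_average` returns `[2.5, 3.5]`; this result is then sent back to every participant as the new common model for the next round.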

- Real-time applications

Another decisive advantage is that the participants can learn independently of each other. We are working on making this vision a reality. One example is handwriting recognition on multiple devices, where the goal is to recognize characters such as letters and digits. Even if each participant is trained on different digits, in the end everyone benefits from the distributed learning approach and can recognize all of them. Because training happens locally, more data can be recorded and processed, even at small scale and within a shorter period of time. This matters for real-time applications, where bandwidth and latency limit the use of cloud solutions.
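The effect described in the handwriting example can be illustrated with a deliberately simple stand-in for a neural network: a nearest-class-mean classifier whose parameters (per-class feature sums and counts) can be merged exactly. This model choice is an assumption made for clarity, not the method Fraunhofer IMS uses, and the 2-D feature vectors are hypothetical placeholders for digit images. Participant A only sees digits 0 and 1, participant B only digits 2 and 3, yet the merged model covers all four.

```python
from typing import Dict, List, Tuple

# label -> (sum of feature vectors, number of samples)
Stats = Dict[int, Tuple[List[float], int]]


def local_stats(samples: List[Tuple[List[float], int]]) -> Stats:
    """Summarise local data per class; the raw samples never leave the device."""
    stats: Stats = {}
    for x, label in samples:
        s, n = stats.get(label, ([0.0] * len(x), 0))
        stats[label] = ([a + b for a, b in zip(s, x)], n + 1)
    return stats


def merge(all_stats: List[Stats]) -> Dict[int, List[float]]:
    """Combine all summaries into one model: a centroid for every label seen by anyone."""
    totals: Stats = {}
    for stats in all_stats:
        for label, (s, n) in stats.items():
            ts, tn = totals.get(label, ([0.0] * len(s), 0))
            totals[label] = ([a + b for a, b in zip(ts, s)], tn + n)
    return {label: [v / n for v in s] for label, (s, n) in totals.items()}


def predict(model: Dict[int, List[float]], x: List[float]) -> int:
    """Return the label whose centroid is closest (squared Euclidean distance)."""
    return min(model, key=lambda lbl: sum((a - b) ** 2 for a, b in zip(model[lbl], x)))


# Hypothetical toy data: each participant only knows two of the four digits
participant_a = [([0.0, 0.0], 0), ([0.2, 0.0], 0), ([5.0, 0.0], 1), ([5.2, 0.0], 1)]
participant_b = [([0.0, 5.0], 2), ([0.0, 5.2], 2), ([5.0, 5.0], 3), ([5.2, 5.0], 3)]

model = merge([local_stats(participant_a), local_stats(participant_b)])
print(predict(model, [0.1, 5.1]), predict(model, [5.1, 0.1]))  # 2 1
```

After the merge, participant A's copy of the model recognizes digit 2 even though A never saw a single example of it. Real neural networks do not merge this cleanly, which is why averaging schemes and non-uniform data distributions are an active research topic.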

- Fraunhofer IMS stands up for your data

Fraunhofer IMS is working to reduce the communication effort even further without noticeably degrading the quality of the common model. Research is also under way on methods to increase the resilience of the approach. Together, these efforts enable the use of distributed learning in data-sensitive environments.
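One widely used way to cut communication in distributed learning is top-k sparsification: each participant transmits only the k largest-magnitude entries of its model update, together with their indices. The sketch below assumes that technique purely as an illustration; the article does not say which methods Fraunhofer IMS is investigating, and the function names are hypothetical.

```python
from typing import Dict, List


def top_k_sparsify(update: List[float], k: int) -> Dict[int, float]:
    """Keep only the k largest-magnitude entries of an update, as {index: value}."""
    if k <= 0:
        return {}
    ranked = sorted(range(len(update)), key=lambda i: abs(update[i]), reverse=True)
    return {i: update[i] for i in ranked[:k]}


def densify(sparse: Dict[int, float], dim: int) -> List[float]:
    """Rebuild a full-length update on the server; entries that were not sent become zero."""
    dense = [0.0] * dim
    for i, v in sparse.items():
        dense[i] = v
    return dense
```

With `update = [0.01, -0.9, 0.05, 0.4, -0.02]` and `k = 2`, only entries 1 and 3 are sent, and `densify` restores `[0.0, -0.9, 0.0, 0.4, 0.0]` on the server. In practice, the discarded remainder is often kept locally and added to the next round's update (error feedback), so small contributions are delayed rather than lost.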